Ollama AI and its accompanying Web UI have recently captured the attention of developers and AI enthusiasts alike. Together, they let users deploy large language models (LLMs) locally, offering an alternative to cloud-based AI services such as ChatGPT. In this article, we will explore what Ollama AI and Ollama Web UI are, how to use them, and how they differ from other platforms.

Understanding Ollama AI

Ollama AI is a local deployment framework for running LLMs directly on users' own machines. This approach improves privacy, because prompts and sensitive data never need to leave the machine for an external server. Users can also work with a range of models without paying the usage fees of cloud services, which makes Ollama an attractive option for businesses and individuals looking for affordable (and smart) solutions to their AI problems.

What is Ollama Web UI?

Ollama Web UI (since renamed Open Web UI) is a user-friendly interface for interacting with Ollama AI. Its clean, intuitive design resembles popular chat interfaces and lets users create and manage modelfiles, customize chat elements, and chat with multiple models at once.

Key Features of Ollama Web UI

- Local Operation: The Web UI can run entirely offline, so user data stays on the user's own machine.

- Multiple Model Support: Users can load various LLMs concurrently, enabling diverse interactions tailored to specific needs.

- Customizable Chat Elements: Users can create characters or agents within the chat interface, enhancing engagement by reflecting different communication styles.

- Document Uploads: The ability to upload documents allows users to provide context or reference material directly in their interactions with the model.

- Voice Input: The platform supports voice commands, making it accessible for users who prefer hands-free operation.

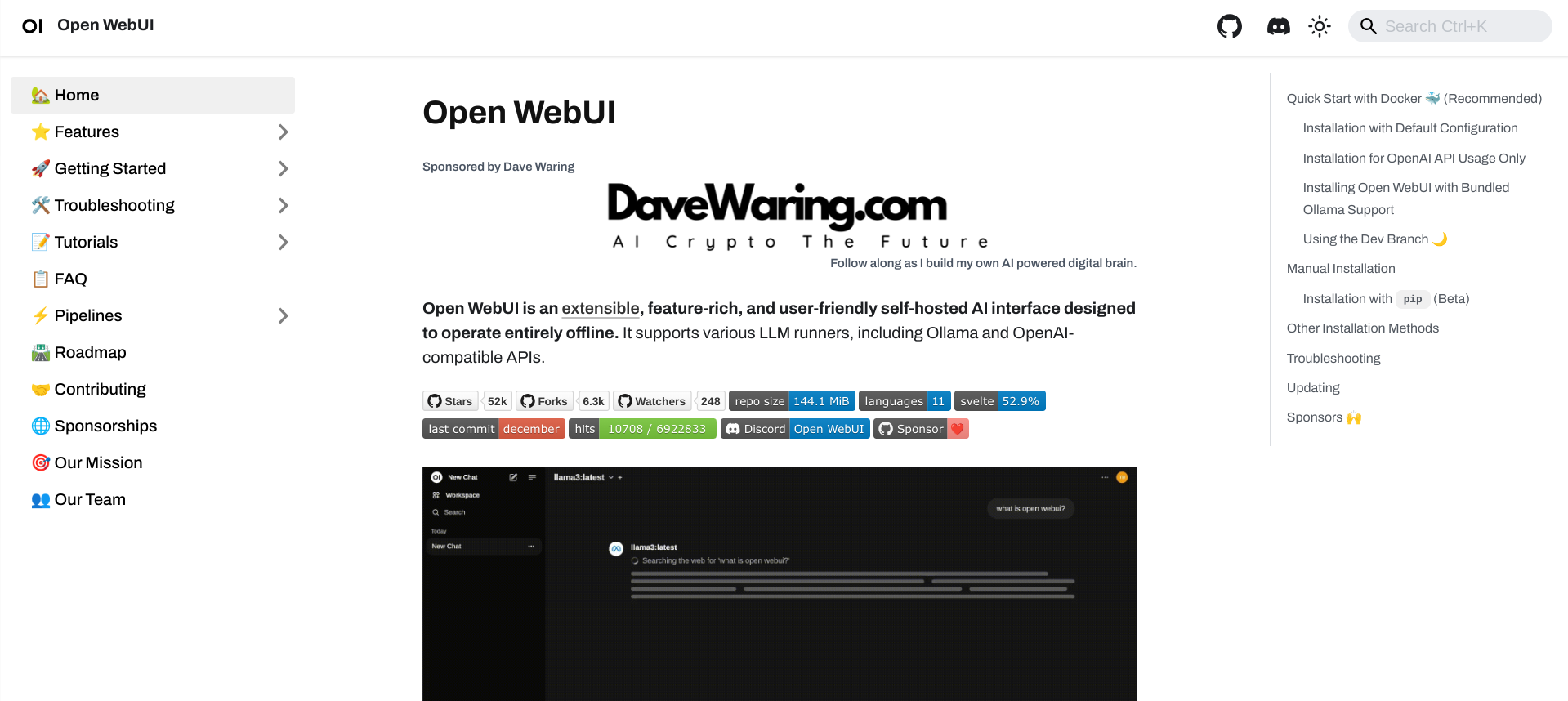
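Under the hood, a front end like this talks to the local Ollama server. The minimal sketch below assumes Ollama's `/api/chat` endpoint on its default port 11434 and shows one plausible way three of the features above map onto a request: a "character" as a system message, an uploaded document as extra context in the user turn, and multi-model support as the same conversation sent to several models. The helper names (`build_chat_payload`, `ask_models`) and the prompt wording are hypothetical; this is an illustration, not how Open Web UI implements these features internally.

```python
import json
import urllib.request

# Assumption: Ollama is serving on its default local address.
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"


def build_chat_payload(model, persona, document, question):
    """Build a request body for Ollama's /api/chat endpoint (hypothetical helper).

    The persona becomes a system message (a simple "character"), and any
    document text is placed before the user's question as context.
    """
    messages = [{"role": "system", "content": persona}]
    user_content = question
    if document:
        user_content = f"Reference document:\n{document}\n\nQuestion: {question}"
    messages.append({"role": "user", "content": user_content})
    # stream=False asks Ollama for one JSON object instead of a stream of chunks.
    return {"model": model, "messages": messages, "stream": False}


def ask_models(models, persona, document, question):
    """Send the same conversation to several local models; returns {model: reply}."""
    replies = {}
    for model in models:
        body = json.dumps(build_chat_payload(model, persona, document, question))
        request = urllib.request.Request(
            OLLAMA_CHAT_URL,
            data=body.encode("utf-8"),
            headers={"Content-Type": "application/json"},
            method="POST",
        )
        with urllib.request.urlopen(request) as response:
            result = json.loads(response.read())
        # Non-streaming /api/chat replies carry the text under message.content.
        replies[model] = result["message"]["content"]
    return replies
```

Calling `ask_models(["llama3", "mistral"], "You are a concise assistant.", "", "What is a local LLM?")` would return one reply per model, assuming both example models have already been pulled.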

How to Use Ollama AI and Web UI

Here are the main steps for using Ollama AI and its Web UI:

- Installation: Begin by installing Ollama itself and Docker on your machine. Then, clone the Open Web UI repository from GitHub and follow the setup instructions provided in its documentation.

- Running Models: After installation, you can run models with a simple terminal command (e.g., `ollama run <model-name>`). This command loads the model, downloading it first if it is not yet available locally, and starts an interactive session.

- Accessing the Interface: Open your web browser and navigate to `localhost:3000` to access the Ollama Web UI. Here, you can interact with your models through an intuitive chat interface.

- Customization: Explore options for adding characters, uploading documents for context, and adjusting settings to personalize your experience.

- Engagement: Start chatting with your models by inputting prompts or using voice commands to generate responses.
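Besides the terminal and the browser, the same local server can be scripted. The minimal sketch below assumes Ollama is running on its default port 11434: `generate` sends one prompt to the `/api/generate` endpoint (a one-shot, scripted counterpart to typing a prompt into `ollama run`), and `web_ui_is_up` checks whether anything answers at `localhost:3000`. The function names are hypothetical.

```python
import json
import urllib.error
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # Ollama's default local address
WEB_UI_URL = "http://localhost:3000"   # Web UI address used in this article


def build_generate_request(model, prompt):
    """Build a POST request for Ollama's /api/generate endpoint."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False})
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model, prompt):
    """Send one prompt and return the model's full reply as a string."""
    with urllib.request.urlopen(build_generate_request(model, prompt)) as response:
        # With stream=False the reply text is in the "response" field.
        return json.loads(response.read())["response"]


def web_ui_is_up(url=WEB_UI_URL, timeout=2.0):
    """Return True if a server answers HTTP requests at the Web UI address."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        return True   # the server answered, even if with an error status
    except OSError:
        return False  # connection refused, timeout, name lookup failure, ...
```

For example, `generate("llama3", "Explain what a local LLM is in one sentence.")` would print a reply once the example model `llama3` has been pulled; no request leaves `localhost`.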

Why is Ollama Different from Other Platforms?

Ollama AI stands out from other AI platforms for several reasons:

- Local Deployment: Unlike cloud-based solutions that require internet connectivity and data transfer to external servers, Ollama operates locally, providing greater control over data privacy.

- Cost-Effectiveness: Users can leverage powerful LLMs without incurring ongoing subscription fees associated with cloud services like ChatGPT.

- Customization Options: Modelfiles and customizable chat interactions let users tailor their experience far more than many standard chat interfaces allow.

- Community-Driven Development: Because the project is open source, users can contribute to its development directly, fostering a collaborative environment where improvements prompted by user feedback are integrated continuously.
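Modelfiles are plain-text recipes that Ollama uses to package a base model together with parameters and a system prompt. The minimal sketch below renders one from Python, assuming the `FROM`, `PARAMETER`, and `SYSTEM` instructions of Ollama's Modelfile format; the helper name `render_modelfile` and the example values are hypothetical.

```python
def render_modelfile(base_model, system_prompt, parameters=None):
    """Render Ollama Modelfile text (hypothetical helper).

    base_model: name of a model already available to Ollama, e.g. "llama3".
    system_prompt: instructions baked into the custom model.
    parameters: optional mapping such as {"temperature": 0.7}.
    """
    if '"""' in system_prompt:
        # A triple quote would terminate the SYSTEM block early.
        raise ValueError("system prompt must not contain triple quotes")
    lines = [f"FROM {base_model}"]
    for name, value in (parameters or {}).items():
        lines.append(f"PARAMETER {name} {value}")
    # Triple quotes let the system prompt span several lines.
    lines.append(f'SYSTEM """{system_prompt}"""')
    return "\n".join(lines) + "\n"
```

Writing the returned string to a file named `Modelfile` and running `ollama create support-agent -f Modelfile` (with `support-agent` as an illustrative name) should make the customized model available to `ollama run` and, through the local server, to the Web UI.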

Ollama AI (and its Web UI) highlights a shift towards local AI solutions that prioritize user control, privacy, and customization. By allowing users to deploy LLMs on their own machines while providing an intuitive interface for interaction, Ollama presents a compelling alternative to traditional cloud-based AI platforms.

What if your design is more complex?

What if a designer wants to change the UI?

What if your product is more complex than a simple chat bar?

What if you need to embed that within a larger product designed on Figma?

This is why we built Polipo.

Curious to see what that means?

Start here.